Mix in Rust with Java (or Kotlin!)

This article is part of our Rust Interop Guide.

JNI

The Java Native Interface (JNI) is the original built-in method to interact with native libraries from Java. Native libraries are libraries which do not run in the JVM but are instead built for a specific operating system using a language like C, C++ or Rust. JNI provides an interface for such native libraries to interact with the Java environment to access Java data structures. The JNI crate provides Rust types for this Java interface, which makes working with JNI in Rust very convenient!

Double to string

We will take a function that turns doubles (also known as f64 in Rust) into strings as a running example throughout this blog post. Such a function should for example turn the value 3.14 into the string "3.14". In Java, we can do this by calling Double.toString, but let's see if we can do it faster by integrating Rust in our Java project.

Using the JNI crate, we expose a doubleToStringRust function from our Rust code by marking it as #[no_mangle] and extern "C":

    use jni::objects::JClass;
    use jni::sys::{jdouble, jstring};
    use jni::JNIEnv;

    #[no_mangle]
    pub extern "C" fn Java_golf_tweede_JniInterface_doubleToStringRust(
        env: JNIEnv,
        _class: JClass,
        v: jdouble,
    ) -> jstring {
        env.new_string(v.to_string()).unwrap().into_raw()
    }

Some observations:

- The function name is in a specific format that tells JNI in which class the function should be exposed. In this case, the function is exposed in the JniInterface class in the golf.tweede package.
- While the jdouble type is simply an alias for f64, the jstring type is a Java object, for which the JNI environment env provides a convenient constructor function.
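
To make the naming rule concrete, here is a small sketch that derives the exported symbol name from a class and a method name. The jni_symbol_name helper is hypothetical (it is not part of the JNI crate) and only covers the common cases: a package separator becomes `_` and a literal underscore becomes `_1`. The full JNI rules also escape `;`, `[` and non-ASCII characters, which this sketch ignores.

```rust
/// Builds the symbol name JNI looks up for a native method.
/// Hypothetical helper for illustration; handles plain ASCII names only.
fn jni_symbol_name(class: &str, method: &str) -> String {
    // Escape one name: package separators become '_', a literal '_' becomes "_1".
    fn escape(part: &str) -> String {
        let mut out = String::with_capacity(part.len());
        for c in part.chars() {
            match c {
                '.' | '/' => out.push('_'),
                '_' => out.push_str("_1"),
                other => out.push(other),
            }
        }
        out
    }
    format!("Java_{}_{}", escape(class), escape(method))
}

fn main() {
    let name = jni_symbol_name("golf.tweede.JniInterface", "doubleToStringRust");
    println!("{name}");
}
```

For the class and method used in this post, the sketch yields the same name as the function we exported by hand.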

We compile this Rust code into a dynamic library by configuring the crate type as a "cdylib" in Cargo.toml:

    [package]
    name = "java-interop"
    version = "0.1.0"
    edition = "2021"

    [lib]
    crate-type = ["cdylib"]

After building it with cargo build --release, you should see a libjava_interop.so appear in the target/release folder if you are on Linux. On Windows, the Rust library will be compiled into a .dll file instead of a .so file, and on macOS it will be a .dylib file.
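
As a rough illustration of how the file name depends on the platform, the sketch below assembles it from the constants in std::env::consts. The cdylib_file_name helper is our own invention for this example; it relies on the fact that Cargo replaces hyphens in the package name with underscores when naming the library.

```rust
use std::env::consts::{DLL_PREFIX, DLL_SUFFIX};

/// Returns the file name a cdylib gets on the platform this code is compiled for.
/// Hypothetical helper: Cargo turns '-' in the package name into '_'.
fn cdylib_file_name(package: &str) -> String {
    format!("{}{}{}", DLL_PREFIX, package.replace('-', "_"), DLL_SUFFIX)
}

fn main() {
    // The result depends on the platform, e.g. a "lib" prefix and ".so" suffix on Linux.
    println!("{}", cdylib_file_name("java-interop"));
}
```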

Now we can call our Rust function by loading the dynamic library via System.load, and declaring the doubleToStringRust function as native in the JniInterface class in our golf.tweede package:

    package golf.tweede;

    import java.nio.file.Path;
    import java.nio.file.Paths;

    public class JniInterface {
        public static native String doubleToStringRust(double v);

        static {
            Path p = Paths.get("src/main/rust/target/release/libjava_interop.so");
            System.load(p.toAbsolutePath().toString()); // load library
        }

        public static void main(String[] args) {
            System.out.println(doubleToStringRust(0.123)); // this prints "0.123"!
        }
    }

Note that System.load expects our native library to be at the specified path. Alternatively, you could use System.loadLibrary("java_interop") to load the native library from one of the folders in java.library.path, which on Linux by default contains /usr/java/packages/lib, /usr/lib64, /lib64, /lib and /usr/lib. If you want to package your Java project into a JAR, you still have to make sure that the library is in the correct place, either by including it in the JAR and extracting it before loading, or by installing it separately.

Measuring performance

To see how our dynamically linked Rust implementation compares to Java's Double.toString, we will run some benchmarks using JMH.

We will create functions annotated with @Benchmark for the functionality we want to test:

    @Benchmark
    public String doubleToStringJavaBenchmark(BenchmarkState state) {
        return Double.toString(state.value);
    }

    @Benchmark
    public String doubleToStringRustBenchmark(BenchmarkState state) {
        return doubleToStringRust(state.value);
    }

We supply the input value via a BenchmarkState class so that the JIT compiler cannot optimize the call away, as it could with a constant input value:

    @State(Scope.Benchmark)
    public static class BenchmarkState {
        public double value = Math.PI;
    }

If we now build the project with mvn clean verify, we can run the benchmarks using java -jar target/benchmarks.jar -f 1. Here are the results:

    Benchmark                                  Mode  Cnt         Score        Error  Units
    Main.doubleToStringJavaBenchmark          thrpt    5  29921713.259 ± 576120.424  ops/s
    JniInterface.doubleToStringRustBenchmark  thrpt    5   5401499.220 ±  23625.065  ops/s

If we look at the score, we can see that the Java-only function is about 5.5 times faster than the JNI Rust function. This difference in performance is most likely caused by the added overhead from interacting with a native library.
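
To put the scores in perspective, the following sketch turns the two throughput figures from the JMH output into a ratio and into time per call. The nanos_per_op helper is ours; the two numbers are copied from the results above.

```rust
/// Converts a throughput in operations per second to nanoseconds per operation.
fn nanos_per_op(ops_per_sec: f64) -> f64 {
    1e9 / ops_per_sec
}

fn main() {
    // Scores copied from the JMH output above (ops/s).
    let java = 29_921_713.259;
    let rust_jni = 5_401_499.220;

    println!("speed ratio: {:.2}x", java / rust_jni);
    println!("Java:     {:.1} ns/call", nanos_per_op(java));
    println!("Rust/JNI: {:.1} ns/call", nanos_per_op(rust_jni));
}
```

In other words, a call takes roughly 33 ns in pure Java and roughly 185 ns through JNI, so the gap per call is on the order of 150 ns.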

Speeding things up

Let's see if we can do better. First off, instead of using Rust's standard to_string implementation, let's use the Ryu crate, which uses a fancy algorithm to turn floating point numbers into strings up to 5 times faster!

Here's a new Rust JNI function which uses Ryu to convert doubles to strings:

    #[no_mangle]
    pub extern "C" fn Java_golf_tweede_JniInterface_doubleToStringRyu(
        env: JNIEnv,
        _class: JClass,
        value: jdouble,
    ) -> jstring {
        let mut buffer = ryu::Buffer::new();
        env.new_string(buffer.format(value)).unwrap().into_raw()
    }

Now let's see how this Ryu method compares in the benchmark. We turned the results into a nice bar chart for easy comparison:

That's about 50% faster than our original Rust function, but it does not yet come anywhere near the performance of the Java-only benchmark.

Converting many doubles at once using arrays

There still is too much overhead from interacting with our native library. We can diminish this overhead by doing more work per call on the Rust side. Instead of sending just one double every time we call the function, we can send an array containing many doubles at once, and have the function combine all the doubles into a string with spaces between the values. While this is a bit of a synthetic example which is not necessarily very useful in practice, it will allow us to demonstrate how performance improves with larger workloads.

On the Java side, we define a function doubleArrayToStringRyu:

public static native String doubleArrayToStringRyu(double[] v);

In our Rust library, we implement it as follows:

    #[no_mangle]
    pub extern "C" fn Java_golf_tweede_JniInterface_doubleArrayToStringRyu(
        mut env: JNIEnv,
        _class: JClass,
        array: JDoubleArray,
    ) -> jstring {
        let mut buffer = ryu::Buffer::new();
        let len: usize = env.get_array_length(&array).unwrap().try_into().unwrap();
        let mut output = String::with_capacity(10 * len);

        {
            let elements = unsafe {
                env.get_array_elements_critical(&array, ReleaseMode::NoCopyBack)
                    .unwrap()
            };

            for v in elements.iter() {
                output.push_str(buffer.format(*v)); // add number to output string
                output.push(' ');
            }
        }

        env.new_string(output).unwrap().into_raw()
    }

Some observations:

- The double[] becomes a JDoubleArray, for which we must use the env to retrieve its length and its elements. As this array is only used as an input, we use ReleaseMode::NoCopyBack to tell JNI that it does not need to copy back any changed values to the Java side.
- We create an output string with a large capacity to make sure that it won't have to reallocate as much when pushing many characters to it.
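
The string-building part of this function can be tried out without any JNI setup. The sketch below is a dependency-free approximation: join_doubles is a hypothetical name, and it uses the standard library's float formatting instead of Ryu, so the exact digits may differ from the real function (for example, the standard library prints whole numbers without a trailing ".0").

```rust
use std::fmt::Write;

/// Joins doubles into one string with a space after every value.
/// Uses std formatting instead of ryu (an assumption to stay dependency-free).
fn join_doubles(values: &[f64]) -> String {
    // Reserve roughly 10 bytes per number up front to limit reallocations.
    let mut output = String::with_capacity(10 * values.len());
    for v in values {
        // Writing into a String cannot fail, so the unwrap never panics.
        write!(output, "{v} ").unwrap();
    }
    output
}

fn main() {
    println!("{:?}", join_doubles(&[1.5, 2.0, 3.25]));
}
```

Like the JNI version, this leaves a trailing space after the last value.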

To benchmark this new function, we add an array of 1 million doubles to our benchmarking state:

    @State(Scope.Benchmark)
    public static class BenchmarkState {
        public double value = Math.PI;
        public double[] array = new double[1_000_000];

        @Setup
        public void setup() {
            for (int i = 0; i < array.length; i++) {
                array[i] = i / 12f;
            }
        }
    }

And now we define benchmarks for our Rust JNI function and a Java-only function for comparison:

    @Benchmark
    public String doubleArrayToStringRyuBenchmark(BenchmarkState state) {
        return doubleArrayToStringRyu(state.array);
    }

    @Benchmark
    public String doubleArrayToStringJavaBenchmark(BenchmarkState state) {
        return String.join(" ", DoubleStream.of(state.array).mapToObj(Double::toString).toArray(String[]::new));
    }

Let's see how they perform:

Look at that! Now our JNI Rust function is almost twice as fast as the Java-only function! 😎

Of course, it might also be possible to further optimize the Java-only code. However, it does show that under certain circumstances, it can be worth it to use native libraries for performance despite the added overhead of calling native functions.

JNR-FFI

Now, let's look at a different method of calling native libraries from Java: JNR-FFI. Unlike JNI, JNR-FFI uses a generic C interface to interact with native libraries. This means we don't have to write JNI-specific code on the Rust side, we don't have to include any specific Java class and package names in our function names, and JNR-FFI will take care of converting between C types and Java types.

JNR-FFI is similar to JNA, another Java library for interacting with native libraries. However, JNR-FFI is supposedly more modern and provides superior performance, so we decided to try out JNR-FFI instead of JNA.

First, let's add JNR-FFI to our project by including it as a Maven dependency in pom.xml (for Rust users: pom.xml for Maven is similar to Cargo.toml, but in XML format):

    <dependency>
        <groupId>com.github.jnr</groupId>
        <artifactId>jnr-ffi</artifactId>
        <version>2.2.17</version>
    </dependency>

Now, let's implement the doubleToStringRust function in Rust with a generic C interface instead of the JNI interface we used before:

    use std::ffi::{c_char, c_double, CString};

    #[no_mangle]
    pub extern "C" fn doubleToStringRust(value: c_double) -> *mut c_char {
        CString::new(value.to_string()).unwrap().into_raw()
    }

Some observations:

- We now use C types from std::ffi instead of the Java JNI types.
- To return a string via the C interface, we first create a C string, which is then returned as a char pointer using into_raw.

On the Java side, we first define an interface with the functions that our Rust library implements:

    public interface RustLib {
        String doubleToStringRust(double value);
    }

And then we load the library using JNR's LibraryLoader:

    public static RustLib lib;

    static {
        System.setProperty("jnr.ffi.library.path", "src/main/rust/target/release");
        lib = LibraryLoader.create(RustLib.class).load("java_interop"); // load library
    }

Note that here we only tell it to load "java_interop" instead of the full filename "libjava_interop.so". JNR-FFI will automatically turn this into the full filename itself. This allows it to choose between a .so, .dll, or .dylib file extension based on the platform that it is running on, making it easier to add cross-platform compatibility!

Oh no! Memory leaks!

We went ahead and also added the Ryu and the array functions from before to our new interface. The array function is now implemented as doubleArrayToStringRyu(array: *const c_double, len: usize) in Rust, and we use std::slice::from_raw_parts to construct a nice &[f64]:

    #[no_mangle]
    pub unsafe extern "C" fn doubleArrayToStringRyu(array: *const c_double, len: usize) -> *mut c_char {
        let slice = std::slice::from_raw_parts(array, len);
        ...
        CString::new(output).unwrap().into_raw()
    }
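
Because from_raw_parts trusts the caller completely, it can help to wrap it in a small helper that at least guards against a null pointer. The following is a sketch of that idea, not the code used in our benchmarks; doubles_from_raw is a hypothetical name.

```rust
use std::slice;

/// Views `len` doubles starting at `ptr` as a slice.
/// Hypothetical helper: returns an empty slice for a null pointer or zero length.
///
/// # Safety
/// A non-null `ptr` must point to `len` initialized, properly aligned f64
/// values that stay valid and unmodified for the lifetime `'a`.
unsafe fn doubles_from_raw<'a>(ptr: *const f64, len: usize) -> &'a [f64] {
    if ptr.is_null() || len == 0 {
        return &[];
    }
    unsafe { slice::from_raw_parts(ptr, len) }
}

fn main() {
    let data = vec![1.0, 2.5, 4.0];
    // `data` outlives the borrowed view, so the safety contract holds.
    let view = unsafe { doubles_from_raw(data.as_ptr(), data.len()) };
    println!("sum = {}", view.iter().sum::<f64>());
}
```

The null check does not make the function safe: a wrong length or a dangling pointer passed from the Java side is still undefined behavior.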

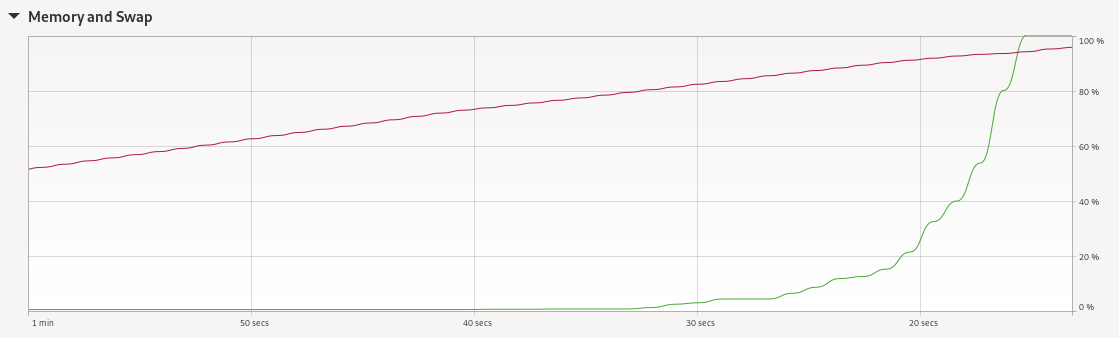

After setting up and running the benchmarks for these functions, we noticed something strange: these benchmarks slow down after a couple of iterations. If we look at our memory usage during the test, we can see why:

The red line shows total memory usage, so it seems like we're running out of memory!

If we look at the documentation of CString::into_raw, it explains that we have to manually call CString::from_raw to free the memory after using into_raw. Currently, we do not do this, which means the memory is never freed, which explains our memory leak.

To fix this, we add a function to our native Rust library which frees a CString using from_raw:

    #[no_mangle]
    pub unsafe extern "C" fn freeString(string: *mut c_char) {
        let _ = CString::from_raw(string);
    }
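
The ownership hand-off between into_raw and from_raw can be exercised from Rust alone, which is a handy way to check the pairing before involving Java. In this sketch, double_to_c_string and read_and_free are hypothetical stand-ins for the exported functions and for what the caller has to do with the returned pointer.

```rust
use std::ffi::{c_char, CStr, CString};

/// Allocates a C string on the Rust heap and hands ownership to the caller.
fn double_to_c_string(value: f64) -> *mut c_char {
    CString::new(value.to_string()).unwrap().into_raw()
}

/// Copies the text out of `ptr`, then frees the C string exactly once.
///
/// # Safety
/// `ptr` must come from `CString::into_raw` and must not be used afterwards.
unsafe fn read_and_free(ptr: *mut c_char) -> String {
    let text = unsafe { CStr::from_ptr(ptr) }.to_string_lossy().into_owned();
    // Retake ownership so the allocation is released when the CString drops.
    drop(unsafe { CString::from_raw(ptr) });
    text
}

fn main() {
    let ptr = double_to_c_string(0.123);
    let text = unsafe { read_and_free(ptr) };
    println!("{text}");
}
```

The important detail is the order: the text is copied out first, and the pointer is never touched again after from_raw has reclaimed it.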

To call this function, we have to update our interface on the Java side. Previously, our functions simply returned Java strings. This meant that JNR-FFI would automatically convert the C char pointers into Java strings. However, to free the C strings properly, we need access to the original C char pointers. So, we make the functions in the Java interface return pointers instead of strings:

    public interface RustLib {
        Pointer doubleToStringRust(double value);
        Pointer doubleToStringRyu(double value);
        Pointer doubleArrayToStringRyu(double[] array, int len);
        void freeString(Pointer string);
    }

To get the strings from these pointers, we can write a convenient helper function that will also call freeString for us:

    public static String pointerToString(Pointer pointer) {
        String string = pointer.getString(0);
        lib.freeString(pointer); // frees the original C string
        return string;
    }

Using this helper function, we no longer leak any memory!

Performance comparison

Now that we've fixed the memory issues, let's see how performance compares between JNR-FFI and JNI. Here are the results we got for the doubleToString functions:

And here are the results for the doubleArrayToString functions:

As you can see, JNR-FFI seems to be slightly slower than JNI. While it is nice that JNR-FFI can interact with the C interface without needing to write JNI-specific code, it does come at a cost of some added overhead.

Project Panama

The last method we will discuss is Project Panama. Project Panama is the newest way to interact with native libraries from Java that is being developed by OpenJDK. As it's still in development, it requires a recent JDK version; we are using OpenJDK 23 for this project. Similar to JNR-FFI, it uses a generic C interface. However, unlike JNR-FFI, Project Panama can automatically generate the interface on the Java side.

To generate the Java bindings for our Rust code, we first generate a C header file using cbindgen. We can then use jextract to generate the Java interface based on the C headers.

You can install cbindgen with cargo:

cargo install --force cbindgen

The easiest way to install jextract is with SDKMAN:

    sdk use java 23-open
    sdk install jextract

Now we can run cbindgen in our Rust project to generate a java_interop.h header file:

cbindgen --lang c --output bindings/java_interop.h

And then we can run jextract in our Java project with the following command to generate Java bindings:

    jextract \
      --include-dir src/main/rust/bindings/ \
      --output src/main/java \
      --target-package golf.tweede.gen \
      --library :src/main/rust/target/release/libjava_interop.so \
      src/main/rust/bindings/java_interop.h

Alternatively, we can automatically generate our bindings by adding a build.rs script to our Rust project that will call cbindgen and jextract:

    fn main() {
        // Create C headers with cbindgen
        let crate_dir = std::env::var("CARGO_MANIFEST_DIR").unwrap();
        cbindgen::Builder::new()
            .with_crate(crate_dir.clone())
            .with_language(cbindgen::Language::C)
            .generate()
            .unwrap()
            .write_to_file("bindings/java_interop.h");

        // Create Java interface with JExtract
        let java_project_dir = std::path::Path::new(&crate_dir).ancestors().nth(3).unwrap();
        std::process::Command::new("jextract")
            .current_dir(java_project_dir)
            .arg("--include-dir")
            .arg("src/main/rust/bindings/")
            .arg("--output")
            .arg("src/main/java")
            .arg("--target-package")
            .arg("golf.tweede.gen")
            .arg("--library")
            .arg(":src/main/rust/target/release/libjava_interop.so")
            .arg("src/main/rust/bindings/java_interop.h")
            .status() // wait for jextract to finish before the build script exits
            .unwrap();
    }

If we now build our Rust project, we will see a java_interop.h file appear in the bindings folder, which contains the C header definitions for our Rust functions. We will also see a bunch of Java files being generated in src/main/java/golf/tweede/gen. The main file we are interested in here is java_interop_h.java, which contains the Java bindings for our Rust functions in addition to a bunch of other native library bindings.

Since Project Panama uses a C interface, we have to make sure to free our strings, otherwise we will leak memory like we saw with JNR-FFI. For this reason, the bindings from Project Panama return a memory segment instead of a string. We will again write a helper function to get the string out of this memory segment and free the original C string by calling our freeString method:

    private static String segmentToString(MemorySegment segment) {
        String string = segment.getString(0);
        java_interop_h.freeString(segment);
        return string;
    }

    public static String doubleToStringRust(double value) {
        return segmentToString(java_interop_h.doubleToStringRust(value));
    }

    public static String doubleToStringRyu(double value) {
        return segmentToString(java_interop_h.doubleToStringRyu(value));
    }

Let's see how Project Panama compares in the benchmarks:

While it is still much slower than not using a native library, Project Panama provides significantly better performance compared to JNI and JNR-FFI!

Using arrays: sharing memory

For our other benchmark, we want to share an array of doubles with our Rust code. To do this, we have to allocate our own off-heap native memory segment using the Foreign Function & Memory (FFM) API. First, we define a confined arena with Arena.ofConfined(). This arena will determine the lifetime of the memory we allocate. Then, we allocate a memory segment for our double array using allocateFrom, and pass it to our Rust interface:

    public static String doubleArrayToStringRyu(double[] array) {
        String output;
        try (Arena offHeap = Arena.ofConfined()) {
            // allocate off-heap memory for input array
            MemorySegment segment = offHeap.allocateFrom(ValueLayout.JAVA_DOUBLE, array);
            output = segmentToString(java_interop_h.doubleArrayToStringRyu(segment, array.length));
        } // release memory for input array
        return output;
    }

The allocated memory segment is automatically freed by the arena once we reach the end of the try block.

Let's see how this array function with Project Panama compares to the other methods:

Compared to our Java-only solution, we are now more than twice as fast! Project Panama remains the most performant FFI method, although the difference between the FFI methods is smaller here because we have relatively less overhead from FFI when doing more work per call.

Final thoughts

Calling a function from a native library adds some performance overhead. This means that unless you are processing lots of data or doing lots of expensive computations, using an FFI is usually not worth it when it comes to performance. However, FFI can still be a useful tool to get access to libraries from other programming languages or to be able to migrate a large code base gradually to another language like Rust.

JNI requires us to use Java types on the Rust side, and allows us to interact with the Java environment via its interface. While this does require us to write JNI-specific code on the Rust side, it does make it convenient to deal with Java objects such as arrays, and it gives us precise control over how we interact with the Java environment.

On the other hand, JNR-FFI and Project Panama use a generic C interface on the Rust side. This means we won't have to write a Java-specific interface, which makes it easy to work with existing libraries that already expose a C interface. It does, however, also mean that we have to be careful with how we manage memory on the Java side to prevent memory leaks.

Of the three methods we tried, Project Panama gives the best performance. It is also convenient to use with its automatically generated bindings using cbindgen and jextract. Project Panama is still in development and requires a recent JDK version, which might be a drawback for Java projects that are stuck on older JDK versions.

We suspect that Project Panama will become the preferred FFI method for Java. While dealing with C types can be a minor inconvenience, the memory issues they bring along can be resolved with small wrapper functions. Ideally, a dedicated tool like CXX (as seen in our C++ interop blog) could be developed in the future that combines cbindgen and jextract to generate a safe interface between Rust and Java. But for now, both JNI and Project Panama already offer great options to integrate Rust in your Java or Kotlin project.

The code used for the various benchmarks discussed in this blog post is available on GitHub. In a future blog, we may take a look at UniFFI, another tool for generating FFI bindings from Rust for Kotlin and various other programming languages.
