Instant data retrieval from large point clouds

The Dutch government offers the AHN [1] as a way to get information about the height of any specific place in the country. The data is offered as a point cloud: a large set of points with some additional metadata. In the current version of the AHN, the dataset has a resolution of about eight points per square meter. This results in about 2.5 TB of compressed data for the relatively small area of the Netherlands. While this is not impossible to store locally, it does pose some challenges.

Point cloud size

Firstly, if we ever wanted to cover a larger area, or if the resolution increased further, the dataset would quickly grow to a size at which local storage is no longer practical. Secondly, the sheer size of the dataset means a lot of waiting just to get a local copy, which doesn't help when a developer wants to do some quick experimentation.
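As a rough illustration of that growth, here is a back-of-the-envelope sketch. It assumes, as a simplification, that compressed size grows linearly with both point density and covered area; real compression ratios depend on the terrain, so treat the numbers as order-of-magnitude only.

```rust
/// Back-of-the-envelope estimate, assuming compressed size grows linearly with
/// point density and with covered area (real compression ratios vary).
fn estimate_size_tb(base_tb: f64, density_factor: f64, area_factor: f64) -> f64 {
    base_tb * density_factor * area_factor
}

fn main() {
    // Baseline from the text: about 2.5 TB at about 8 points/m² for the Netherlands.
    let base_tb = 2.5;

    // Twice the resolution (16 points/m²) over the same area.
    let denser = estimate_size_tb(base_tb, 16.0 / 8.0, 1.0);
    // Same resolution over an area ten times as large.
    let larger = estimate_size_tb(base_tb, 1.0, 10.0);

    println!("16 points/m² over the Netherlands: ~{denser} TB");
    println!("8 points/m² over 10x the area:     ~{larger} TB");
}
```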

The final issue was extracting a small area of such a point cloud to work on. For most of our experiments we wanted to work on areas the size of a small house or even a single tree. However, the AHN dataset is downloaded in large tiles (6.25 × 5 km), each provided as a compressed point cloud in the LAZ file format [2]. Unfortunately, the points in these files are neither indexed nor ordered, so extracting a specific small area means scanning the entire tile.
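To make the cost concrete, here is a minimal sketch of what extraction from an unindexed, unordered tile amounts to: every point has to be visited, so the work is proportional to the size of the whole tile rather than to the handful of points we keep. The `Point` and `Area` types are hypothetical simplifications (real LAS points carry many more attributes), and the points are assumed to be already decompressed.

```rust
/// Hypothetical minimal point type; real LAS points carry many more attributes.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Point {
    x: f64,
    y: f64,
    z: f64,
}

/// Axis-aligned 2D query area (e.g. a single house or tree), in the same
/// coordinate system as the points.
struct Area {
    min_x: f64,
    min_y: f64,
    max_x: f64,
    max_y: f64,
}

impl Area {
    fn contains(&self, p: &Point) -> bool {
        p.x >= self.min_x && p.x <= self.max_x && p.y >= self.min_y && p.y <= self.max_y
    }
}

/// Without an index or ordering, every point in the tile has to be inspected:
/// the cost is O(n) in the size of the whole tile, not in the size of the result.
/// Returns the matching points and the number of points scanned.
fn extract_area<I>(points: I, area: &Area) -> (Vec<Point>, usize)
where
    I: IntoIterator<Item = Point>,
{
    let mut scanned = 0;
    let mut hits = Vec::new();
    for p in points {
        scanned += 1;
        if area.contains(&p) {
            hits.push(p);
        }
    }
    (hits, scanned)
}

fn main() {
    // Stand-in for a decompressed tile: a synthetic 1000 x 1000 grid of points.
    let tile = (0..1_000_000).map(|i| Point {
        x: (i % 1000) as f64,
        y: (i / 1000) as f64,
        z: 0.0,
    });
    let house = Area { min_x: 10.0, min_y: 10.0, max_x: 19.0, max_y: 19.0 };
    let (hits, scanned) = extract_area(tile, &house);
    println!("kept {} of {} scanned points", hits.len(), scanned);
}
```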

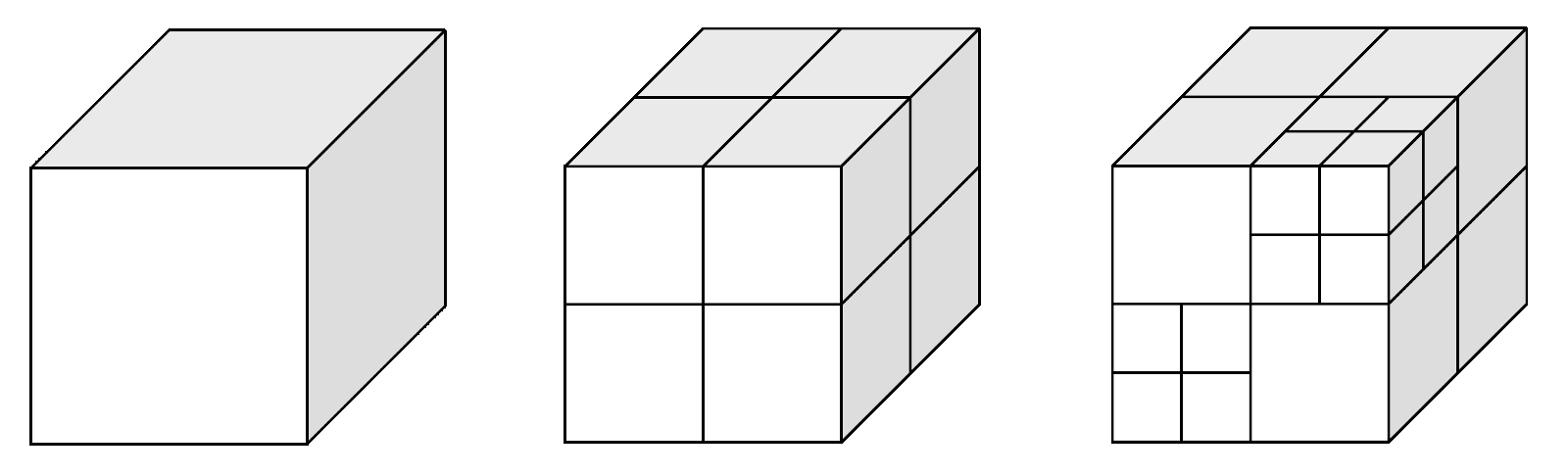

Octrees and Entwine

We opted to split the entire dataset into much smaller blocks using Entwine [3]. Entwine produces the EPT (Entwine Point Tile) format, which organizes the data as an octree [4] of small LAZ files. For our purposes, this means we can host all these small LAZ files on a central server and only need to download a few of them to get all the points for a small area.
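The idea of "only download a few files" can be sketched as an octree walk. In EPT, each node is identified by a `depth-x-y-z` key, its points live in a file such as `ept-data/2-0-0-1.laz`, and a subsample of points is stored at every depth, so overlapping nodes are needed at all levels rather than only at the leaves. This is a hypothetical sketch, not how our client or PDAL implements it: a real client reads the root bounds from `ept.json` and the `ept-hierarchy` files to learn which nodes actually exist, whereas here we simply assume a complete octree down to a fixed depth.

```rust
/// Cubic node bounds; EPT root bounds are a cube, so one edge length suffices.
#[derive(Debug, Clone, Copy)]
struct Cube {
    min: [f64; 3],
    size: f64,
}

/// 2D query footprint (for example a single house), in the dataset's coordinates.
#[derive(Debug, Clone, Copy)]
struct Area {
    min_x: f64,
    min_y: f64,
    max_x: f64,
    max_y: f64,
}

/// True if the node's x/y footprint overlaps the query area (heights are not filtered).
fn intersects_xy(c: &Cube, a: &Area) -> bool {
    c.min[0] <= a.max_x
        && c.min[0] + c.size >= a.min_x
        && c.min[1] <= a.max_y
        && c.min[1] + c.size >= a.min_y
}

/// Depth-first walk from the root, collecting the data file of every node whose
/// footprint overlaps `area`. Subtrees outside the area are skipped entirely.
fn collect_tiles(cube: Cube, key: (u32, u64, u64, u64), area: &Area, max_depth: u32, out: &mut Vec<String>) {
    if !intersects_xy(&cube, area) {
        return; // this whole subtree lies outside the area
    }
    let (d, x, y, z) = key;
    out.push(format!("ept-data/{d}-{x}-{y}-{z}.laz"));
    if d == max_depth {
        return;
    }
    let half = cube.size / 2.0;
    for dx in 0..2u64 {
        for dy in 0..2u64 {
            for dz in 0..2u64 {
                let child = Cube {
                    min: [
                        cube.min[0] + dx as f64 * half,
                        cube.min[1] + dy as f64 * half,
                        cube.min[2] + dz as f64 * half,
                    ],
                    size: half,
                };
                // Child keys double the parent's cell coordinates at each level.
                collect_tiles(child, (d + 1, 2 * x + dx, 2 * y + dy, 2 * z + dz), area, max_depth, out);
            }
        }
    }
}

fn main() {
    // Hypothetical 100 m root cube and a 10 x 10 m area inside it.
    let root = Cube { min: [0.0, 0.0, 0.0], size: 100.0 };
    let tree = Area { min_x: 10.0, min_y: 10.0, max_x: 20.0, max_y: 20.0 };
    let mut tiles = Vec::new();
    collect_tiles(root, (0, 0, 0, 0), &tree, 2, &mut tiles);
    // 7 of the 73 nodes in a complete octree of depth 2 need to be downloaded.
    println!("{} files: {:?}", tiles.len(), tiles);
}
```

Because the query is only a 2D footprint, every height layer above it is included; the deeper the octree, the smaller the fraction of files that has to be fetched.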

PDAL [5], a library for working with point clouds, can already do this for us, but we found that PDAL isn't very fast, and we wanted to iterate more quickly on our data. So we decided to use LASzip [6] (a LAZ library) and Rust [7] to build a fast client ourselves.

Keeping it in memory

While working on our client we noticed one thing about LASzip we really did not like: the external C interface the library offers only supports reading from and writing to files. Our LAZ files, however, had just been downloaded from the internet, and storing each one on disk, reading the points we need from it, and then immediately removing it again is not very efficient. We therefore made a patch for LASzip [8] that allows reading from and writing to memory directly.
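The benefit of reading from memory is easiest to see in Rust's own I/O traits: a parser written against `Read` accepts a downloaded byte buffer wrapped in `std::io::Cursor` just as well as a file, with no temporary file involved. The sketch below is hypothetical and is neither the laszip-rs API nor the LASzip patch; it only decodes a few fields of the uncompressed public header that LAZ files share with LAS, using the LAS 1.2 header layout (227 bytes).

```rust
use std::io::{self, Cursor, Read};

/// A few fields from the public LAS header (which LAZ files share).
#[derive(Debug, PartialEq)]
struct HeaderSummary {
    version: (u8, u8),
    offset_to_points: u32,
    point_count: u32,
}

/// Accepts any `Read`, so an in-memory download works just as well as a file.
fn read_header<R: Read>(mut src: R) -> io::Result<HeaderSummary> {
    let mut buf = [0u8; 227]; // size of a LAS 1.2 public header
    src.read_exact(&mut buf)?;
    if &buf[..4] != b"LASF" {
        return Err(io::Error::new(io::ErrorKind::InvalidData, "missing LASF signature"));
    }
    // Header fields are little-endian.
    let u32_at = |o: usize| u32::from_le_bytes(buf[o..o + 4].try_into().unwrap());
    Ok(HeaderSummary {
        version: (buf[24], buf[25]),
        offset_to_points: u32_at(96),
        point_count: u32_at(107), // legacy "number of point records" field
    })
}

fn main() -> io::Result<()> {
    // Stand-in for bytes received over HTTP: a header-only buffer built by hand.
    let mut downloaded = vec![0u8; 227];
    downloaded[..4].copy_from_slice(b"LASF");
    downloaded[24] = 1;
    downloaded[25] = 2;
    downloaded[96..100].copy_from_slice(&227u32.to_le_bytes());
    downloaded[107..111].copy_from_slice(&42u32.to_le_bytes());

    // No temporary file: the bytes are parsed straight from memory.
    let header = read_header(Cursor::new(downloaded))?;
    println!("{header:?}");
    Ok(())
}
```

`Cursor` also implements `Seek`, which a real LAZ reader needs to jump to the point data and chunk table.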

With the newly patched version of LASzip, we started writing our own LASzip wrapper for Rust, laszip-rs. Our main goals for this wrapper were to make it easy to iterate over all points in a LASzip file, and to make creating readers and writers safer by hiding all manual resource management and by catching any errors that occur.
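The general pattern behind such a wrapper can be sketched in plain Rust: a type that owns the raw handle, releases it in `Drop`, and exposes the points through `Iterator` with `Result` items. This is a hypothetical illustration, not the actual laszip-rs API; `RawHandle` merely simulates a C reader handle so the example runs without LASzip, and a real wrapper would make FFI calls where the comments indicate.

```rust
use std::cell::Cell;
use std::rc::Rc;

#[derive(Debug, Clone, Copy, PartialEq)]
struct Point {
    x: i32,
    y: i32,
    z: i32,
}

#[derive(Debug, PartialEq)]
struct ReadError(String);

/// Hypothetical stand-in for a raw C reader handle. In a real wrapper the point
/// data would come from FFI calls; here it is simulated so the example runs.
struct RawHandle {
    points: Vec<Point>,
    corrupt_at: Option<usize>, // simulate a decoding error at this index
    closed: Rc<Cell<bool>>,    // lets us observe that the handle was released
}

/// Safe wrapper: owns the handle, releases it exactly once in `Drop`, and reports
/// failures as `Result`s instead of status codes the caller must remember to check.
struct Reader {
    handle: RawHandle,
    index: usize,
    failed: bool,
}

impl Reader {
    fn new(handle: RawHandle) -> Self {
        Reader { handle, index: 0, failed: false }
    }
}

impl Iterator for Reader {
    type Item = Result<Point, ReadError>;

    fn next(&mut self) -> Option<Self::Item> {
        if self.failed || self.index >= self.handle.points.len() {
            return None;
        }
        if self.handle.corrupt_at == Some(self.index) {
            self.failed = true; // yield the error once, then stop iterating
            return Some(Err(ReadError(format!("corrupt point at index {}", self.index))));
        }
        let point = self.handle.points[self.index];
        self.index += 1;
        Some(Ok(point))
    }
}

impl Drop for Reader {
    fn drop(&mut self) {
        // A real wrapper would close and destroy the underlying C reader here.
        self.handle.closed.set(true);
    }
}

fn main() {
    let closed = Rc::new(Cell::new(false));
    let reader = Reader::new(RawHandle {
        points: vec![Point { x: 1, y: 2, z: 3 }, Point { x: 4, y: 5, z: 6 }],
        corrupt_at: None,
        closed: Rc::clone(&closed),
    });

    // Collecting into Result<Vec<_>, _> stops at the first error, if any.
    let points: Result<Vec<Point>, ReadError> = reader.collect();
    println!("{points:?}");
    println!("handle released: {}", closed.get());
}
```

Because the reader is an ordinary `Iterator`, standard adapters such as `filter` and `take` work on it directly, and the handle is released even when iteration stops early.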

Performance

With our LASzip wrapper completed, we could finally test our new client. We compared it with retrieval via PDAL, using an area of about 500 m² around our office.

- First, we used our tried-and-tested method of downloading the source files directly from the Dutch government, but a pipeline extracting the data for the area this way took at least several minutes.

- Next, we switched to using PDAL to retrieve the data from our Entwine point cloud stored on Google Cloud Storage. This took approximately 1802 ms.

- Our retrieval tool managed to get this time down to 799 ms.

- With caching enabled, subsequent requests completed within 450 ms, which is less than 25% of the time PDAL needed.
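The text does not say what exactly is cached. A plausible minimal sketch, assuming the downloaded LAZ tiles themselves are kept in memory by node key so that repeated requests skip the network: `TileCache` and the `fetch` closure are hypothetical names, with `fetch` standing in for the HTTP request to the storage bucket.

```rust
use std::collections::HashMap;

/// Hypothetical in-memory cache of downloaded tiles, keyed by EPT node key
/// (e.g. "2-0-0-1"). `fetch` stands in for an HTTP GET against the bucket.
struct TileCache<F: FnMut(&str) -> Vec<u8>> {
    fetch: F,
    tiles: HashMap<String, Vec<u8>>,
}

impl<F: FnMut(&str) -> Vec<u8>> TileCache<F> {
    fn new(fetch: F) -> Self {
        TileCache { fetch, tiles: HashMap::new() }
    }

    /// Returns the tile bytes, downloading them only on the first request.
    fn get(&mut self, key: &str) -> &[u8] {
        if !self.tiles.contains_key(key) {
            let bytes = (self.fetch)(key);
            self.tiles.insert(key.to_string(), bytes);
        }
        &self.tiles[key]
    }
}

fn main() {
    let mut downloads = 0;
    let mut cache = TileCache::new(|key: &str| {
        downloads += 1; // count simulated network requests
        format!("bytes of {key}").into_bytes()
    });

    // The second request for "0-0-0-0" is served from memory.
    for key in ["0-0-0-0", "1-0-0-0", "0-0-0-0"] {
        let len = cache.get(key).len();
        println!("{key}: {len} bytes");
    }
    drop(cache); // end the closure's mutable borrow of `downloads`
    println!("downloads: {downloads}");
}
```

An unbounded `HashMap` is the simplest choice for a short-lived client; a long-running service would want an eviction policy or an on-disk cache instead.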

While 450 ms is not instant, it is fast enough to be very useful in most cases. Typically, it will not be the slowest part of a data processing pipeline, and it allows developers to iterate quickly on real-world data, even in the prototyping phase.

Conclusion

Thanks to our new tool, our developers no longer need a local copy of the dataset. Entwine gives them an easily accessible remote point cloud, which even allows us to visualize the entire point cloud without effort. Finally, we can extract tiny parts of the point cloud relatively quickly, making rapid experimentation much easier.

The source code of our Rust wrapper and LASzip modifications, laszip-rs, is available for anyone to use [9]. We will likely publish more on point cloud data and related applications in the future.

Follow our blog or look out for anything related on our GitHub account [10] to keep up to date.

Resources

[1] Actueel Hoogtebestand Nederland

[2] LAZ

[3] entwine

[4] octree

[5] PDAL

[6] LASzip

[7] Rust

[8] patch for LASzip

[9] laszip-rs

[10] Tweede golf GitHub