Three.js Collada to JSON converter

The Collada format is the most commonly used format for 3D models in Three.js. However, the Collada format is an interchange format, not a delivery format.

Interchange vs. delivery

Where a delivery format should be as small as possible and optimized for parsing by the receiving end, an interchange format doesn't have such requirements, it should just make the exchange of models between 3D authoring tools painless. Because Collada is XML it is rather verbose. And to parse a Collada, Three.js has to loop over every node of the tree and convert it to a Three.js 3D object.

Three.js' JSON format

For improved delivery we first looked at glTF. Unfortunately it wasn't without flaws in our implementations. Next we decided to try Three.js' own JSON format for delivery. JSON is less verbose and because it is Three.js' own format, parsing is done in a breeze. After some fruitless experiments with Maya's Three.js JSON exporter and some existing Collada to JSON converters, we tried our luck with Three.js' built in toJSON() method.

Every 3D object inherits the toJSON() method from the class Object3D, so you can convert a loaded Collada model to JSON and then save it to disk. We wanted to wrap this idea into a Nodejs app but the ColladaLoader for Three.js depends on the DOMParser, and there is not yet an adequate equivalent for this in Nodejs.

Three.js JSON converter

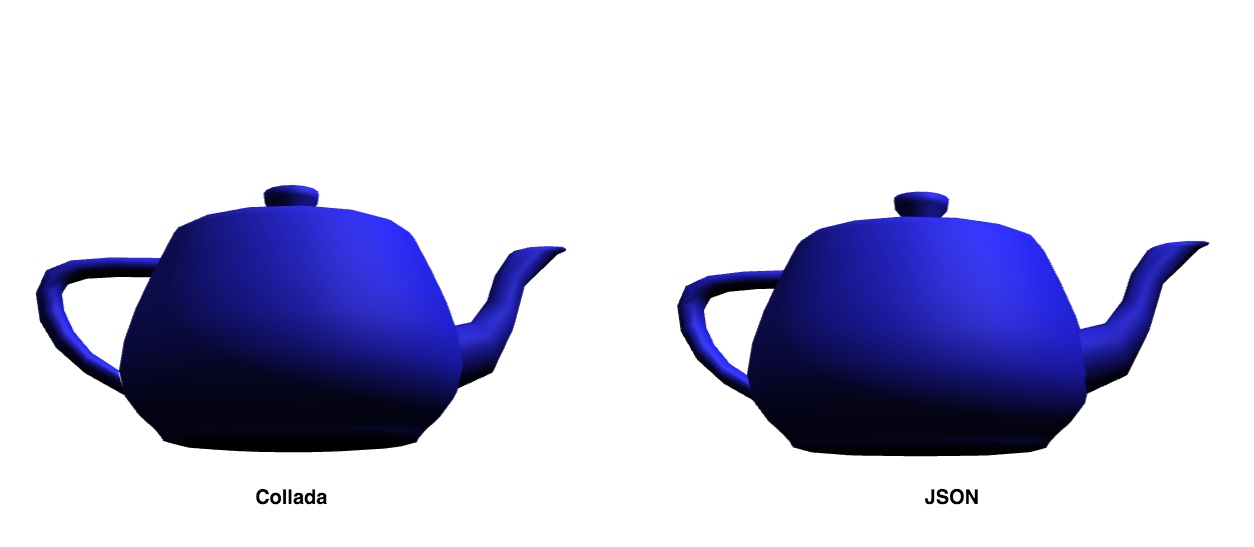

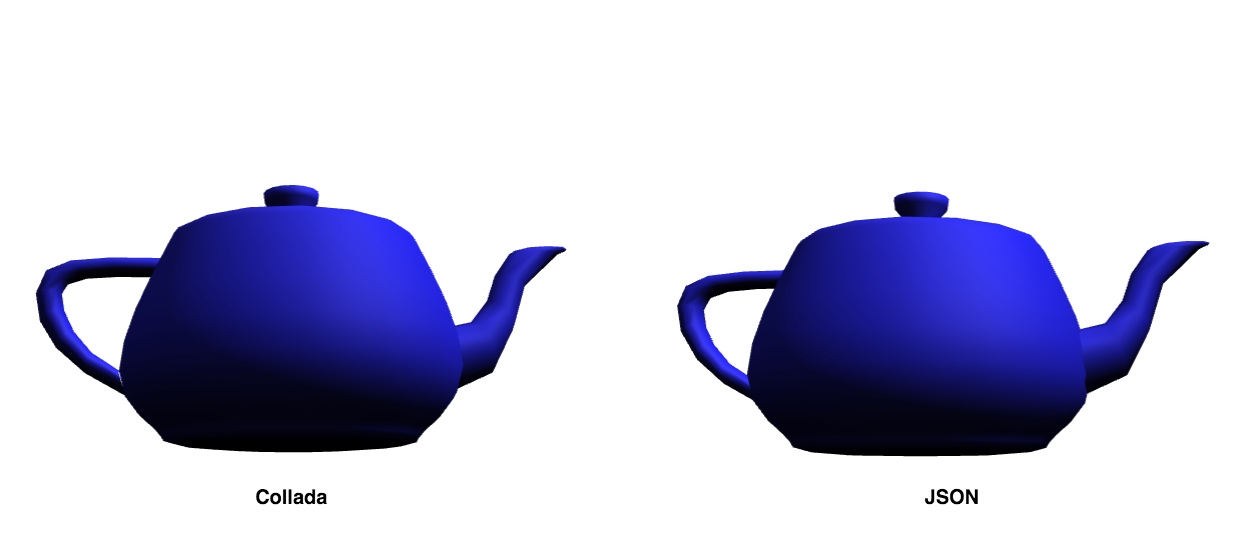

So we made an online converter. There are 2 versions; a preview version that shows the model as Collada and as JSON, and a 'headless' version that just converts the Collada. The first version is suitable if you want to convert only a few models and check the models side by side for possible conversion errors, a Collada to JSON preview. If you want to convert a large number of Colladas you'd better use the second version, a headless Collada to JSON headless.

All great teapots are alike

All great teapots are alike

How it works

First the Collada gets parsed by the DOMParser to search for textures. This is necessary because Three.js' toJSON() method does not include textures in the resulting JSON object.

We add the images of all found textures to the THREE.Cache object. By doing so we suppress error messages generated by the Collada loader.

Then we use the parse() method of the ColladaLoader to parse the Collada model into a Three.js Group, and because a Group inherits from Object3D we can convert it to JSON right away.

The last step is to add the texture images to the JSON file and save the result as a Blob using URL.createObjectURL. All done!

Code and links

- Collada to JSON converter preview version

- Code on Github preview version

- Collada to JSON converter headless version

- Code on Github headless version

- Boris Ignjatovic, our preferred 3D artist. Thanks for helping us find the best workflow!